Sam Hatfield joined ECMWF in October 2019 and is developing a single-precision version of the global ocean model NEMO. (Image copyright: CSCS / Marco Abram)

ECMWF’s Annual Seminar from 14 to 18 September 2020 focuses on recent progress and future prospects in numerical methods for atmospheric and oceanic modelling. Sam Hatfield, one of the organisers of this year’s event, is looking at ways of reducing the computational cost of producing weather forecasts by using lower precision in the calculations.

Reducing precision without degrading the forecast skill is a delicate procedure that nevertheless can deliver significant advantages to operational numerical weather prediction (NWP).

“The idea of reducing precision is very much related to some of the changes that supercomputers are going through at the moment,” explains Sam. “Supercomputer developers are branching out into different areas of hardware that are able to take advantage of reduced precision arithmetic.”

Sam’s first experience of using supercomputers came during his physics degree at the University of Bristol. As a teenager he had enjoyed reading popular science books, with one about Einstein’s ideas on quantum mechanics sparking an interest in physics in particular. “For my fourth-year project at Bristol I was given my least-preferred option,” Sam recalls, “but I actually really enjoyed it.” Using a supercomputer to simulate DNA molecules, he was fascinated by how the properties of the molecules emerged from the interactions of their constituent parts.

Following his degree, Sam spent a year working in software development before embarking on a doctorate in environmental research at the University of Oxford, where he first became aware of ECMWF. His supervisors Tim Palmer and Peter Dueben and others had already been looking at ways of speeding up the high-resolution models that produce weather forecasts and suggested Sam could focus on the data assimilation part of the process.

Data assimilation at ECMWF and many other operational forecasting centres accounts for half the computational cost of weather forecasts.

During his PhD studies at Oxford, Sam and his colleagues built their own supercomputer, the Raspberry PiPS (Raspberry Pi Planet Simulator) system, in a bid to understand how supercomputers were made and how to get the most out of them for Earth system modelling.

From simple toy to high resolution

Starting with a drastically simplified toy model that only loosely captured some aspects of full meteorological models, Sam began experimenting with ways of reducing the cost of data assimilation by reducing precision.

“I basically took the computations and gradually lowered the tuning knob of precision to see when it would break,” he says. “I found I could go all the way down to 16 bits – half precision. This is a quarter of the precision we usually use to produce weather forecasts.”
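The "tuning knob" idea can be illustrated with a minimal sketch. This is not Sam's model or code: the damped oscillator, the step size and the helper names `round_to_bits` and `run_toy_model` are all assumptions made for illustration. Every intermediate result is rounded to a chosen number of significant bits, and the final state is compared with the full double-precision run.

```python
import math


def round_to_bits(x, sig_bits):
    """Round x to sig_bits significant binary digits.

    Only the significand is shortened; the exponent range stays that of a
    double, so overflow and underflow of real 16-bit formats are not modelled.
    """
    mantissa, exponent = math.frexp(x)  # x = mantissa * 2**exponent
    scale = 2 ** sig_bits
    return math.ldexp(round(mantissa * scale) / scale, exponent)


def run_toy_model(sig_bits, n_steps=1000, dt=0.01):
    """Integrate a damped oscillator with forward Euler, rounding every result."""
    def r(value):
        return round_to_bits(value, sig_bits)

    x, y = 1.0, 0.0
    for _ in range(n_steps):
        dx = r(dt * y)
        dy = r(dt * r(-x - r(0.1 * y)))
        x, y = r(x + dx), r(y + dy)
    return x, y


# Significand widths of IEEE double, single and half precision.
reference = run_toy_model(53)
errors = {}
for sig_bits in (53, 24, 11):
    x, y = run_toy_model(sig_bits)
    errors[sig_bits] = math.hypot(x - reference[0], y - reference[1])
    print(f"{sig_bits:2d} significant bits: error = {errors[sig_bits]:.3e}")
```

Lowering `sig_bits` further shows where the toy calculation starts to drift visibly from the reference. A real study would judge this against the other sources of uncertainty in the system rather than against the double-precision run alone.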

Later experiments on a simplified atmospheric model called SPEEDY produced similar results. They also gave Sam the opportunity to spend two months in Japan at the RIKEN Advanced Institute for Computational Science (AICS), in Takemasa Miyoshi's research team. “We found we can use much lower precision than we realise for data assimilation,” explains Sam. “Precision doesn’t particularly matter because there are so many other sources of uncertainty that swamp the uncertainty you get from using lower precision in the calculations.”

Like ECMWF’s Integrated Forecasting System (IFS), SPEEDY has a spectral dynamical core. Sam and his co-researcher Mat Chantry at Oxford found that when these models are run at very high resolution, the cost of one part of the model, the 'Legendre transform', grows much faster than that of any other part. Could they use some sort of reduced precision arithmetic to accelerate that part of the model?

Noting that the Legendre transform computation in the IFS is very similar to the matrix multiplications used in artificial intelligence (AI), they experimented to see whether they could use hardware designed for machine learning to accelerate the IFS, with exciting results.

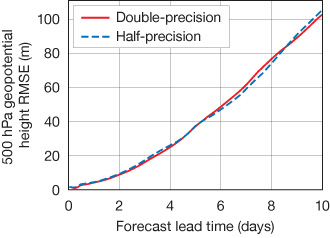

“It worked: we were able to use half precision all the way up to 9 km resolution. As far as I can tell, no one has gotten away with using such a low precision in such a high complexity, high-resolution model before.”

The chart shows the root-mean-square error (RMSE) of the 500 hPa geopotential height for weather forecasts run at 9 km (TCo1279) resolution with double and half-precision Legendre transforms, verified with respect to analysis.
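The link between the Legendre transform and matrix multiplication can be made concrete with a small sketch. It is an illustration under stated assumptions, not the IFS implementation: it uses ordinary Legendre polynomials rather than the associated Legendre functions of a spectral model, an evenly spaced grid, and made-up spectral coefficients. The inputs are rounded to half precision while the products are accumulated in higher precision, an assumption modelled on how mixed-precision matrix hardware commonly works. The helper names `to_half`, `legendre_matrix` and `matvec` are hypothetical.

```python
import math
import struct


def to_half(value):
    """Round a Python float to the nearest IEEE half-precision (16-bit) value."""
    return struct.unpack("e", struct.pack("e", value))[0]


def legendre_matrix(points, n_modes):
    """Rows hold P_0(x)..P_{n_modes-1}(x) for each x, via Bonnet's recurrence."""
    matrix = []
    for x in points:
        row = [1.0, x]
        for n in range(1, n_modes - 1):
            row.append(((2 * n + 1) * x * row[n] - n * row[n - 1]) / (n + 1))
        matrix.append(row[:n_modes])
    return matrix


def matvec(matrix, vector):
    """Plain matrix-vector product, accumulated in double precision."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


n_modes, n_points = 32, 64
# Hypothetical grid and spectral coefficients, chosen only for illustration.
points = [-1.0 + (2 * j + 1) / n_points for j in range(n_points)]
coeffs = [1.0 / (n + 1) for n in range(n_modes)]

p_double = legendre_matrix(points, n_modes)
p_half = [[to_half(v) for v in row] for row in p_double]
coeffs_half = [to_half(c) for c in coeffs]

field_double = matvec(p_double, coeffs)    # reference synthesis
field_half = matvec(p_half, coeffs_half)   # half-precision inputs

rmse = math.sqrt(
    sum((a - b) ** 2 for a, b in zip(field_double, field_half)) / n_points
)
print(f"RMSE between double and half-precision inputs: {rmse:.3e}")
```

The synthesis from spectral coefficients to grid values is a single matrix-vector product, which is why hardware built for the matrix multiplications of machine learning is a natural candidate for this part of a spectral model.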

To save time, this research was done using a software emulator rather than real GPUs or AI hardware. ECMWF scientists hope to run these experiments next year on the Summit supercomputer in the United States as part of an INCITE programme award. Sam is confident that the idea of using machine learning chips to accelerate the weather model will work and says the results could influence later high-performance computing procurements.

During his time at Oxford, Sam was able to work closely with experts at ECMWF and attended several training courses and Annual Seminars. When a position in ECMWF’s numerical methods group arose last year, the timing and subject matter worked out well for Sam.

Forensic approach

When Sam joined ECMWF in October 2019, work to prepare for the use of single precision operationally in the atmospheric model of the IFS was already well under way. He was tasked with developing a single-precision version of NEMO, a community ocean model used operationally at ECMWF.

This would be quite straightforward, he thought, because the groundwork had already been done at other centres, and he could work with the Barcelona Supercomputing Center (BSC), which had been running NEMO at single precision quite successfully for several years.

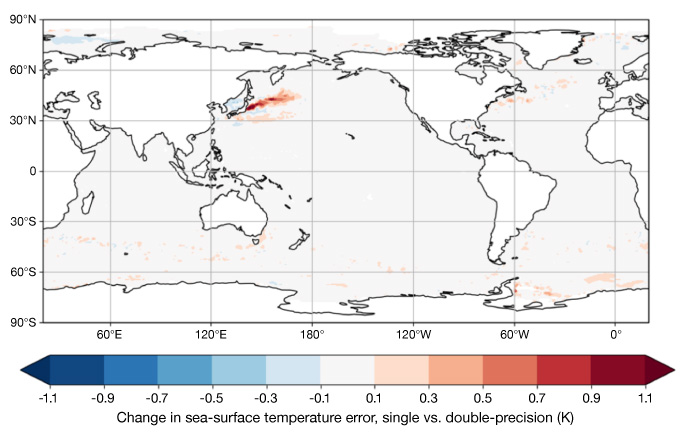

But when this model was put back into ECMWF’s operational system, two parts turned out to be particularly troublesome with single precision: the sea ice model and the icebergs functionality.

“It was a baptism of fire,” Sam admits. “Although I was confident with Fortran and computing in general, I had never debugged such a complex application.”

It took three to four months of technical work to get the model running stably in single precision for long periods of time. After a frustrating couple of months, Sam developed an almost forensic approach to isolating faults, helped by meticulous note-keeping.

Another breakthrough came from the decision to run the single-precision NEMO model within ECMWF’s experimentation system rather than separately. This allowed him to reduce the feedback time and find bugs much more quickly: “The first 40-year simulation, including bug fixing, took about four weeks at low resolution. The next one went through in ten days without any bugs at all. That was very satisfying.”

The chart shows the change in sea-surface temperature (SST) error (K) compared to observations when moving from double precision to single precision for NEMO simulations at the high-resolution operational configuration of 0.25 degrees global resolution, for a 40-year period. The change in error is practically zero across most of the globe, apart from the area around Japan, which is still under investigation.

All the bugs found during the arduous debugging process were reported to the NEMO consortium through the BSC and are now back in the NEMO source repository.

Towards single-precision coupled simulations

Single-precision NEMO integrations are now stable at the operational resolution of 0.25 degrees. Sam is working with Kristian Mogensen to establish the technical infrastructure that will allow them to run the first ever fully single-precision coupled atmosphere–ocean simulations. This could be beneficial for operations, especially for the seasonal forecasting system, where the ocean model accounts for about 60% of the computational cost.

In his Annual Seminar talk, Sam will present research from his PhD at Oxford and give an update on the status of single precision in the atmospheric and ocean models used in ECMWF’s IFS. He will also touch on supercomputing trends and history.

“The idea of reducing precision is not simply an academic exercise or one that is limited to operational meteorology. It will be a very important theme in high-performance computing in general over the next 10 to 20 years.”

Sam is funded through the ESiWACE2 project. ESiWACE2 has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 823988.